About

I'm an Assistant Professor of Computer Science at Harvard SEAS where I lead the Data-Centric Machine Learning (DCML) group. I'm also an Associate Faculty at the Kempner Institute, and have affiliations with the Center for Research on Computation and Society and the Harvard Data Science Initiative. I am also a researcher at Microsoft Research New England.

In my group, we study how data, not just models, shapes the behavior and reliability of AI systems. Our research develops foundational principles and methods for characterizing, transforming, and optimizing datasets to make learning more efficient, interpretable, and adaptive. By treating datasets as structured, dynamic entities rather than static inputs, we aim to build a rigorous science of data for machine learning: a framework that formally connects data properties to model outcomes. In other words, we seek to reclaim the role of data in AI and ML: data is often the scarcest, most valuable, and most manipulable resource in learning systems, yet we still lack a systematic understanding of how to harness it effectively, robustly, and predictably.

Our work integrates tools from optimal transport, information theory, and generative modeling, and applies them across domains including scientific data, language, and vision. For example, we have developed methods based on optimal transport to align datasets from different domains, compare complex data distributions, generate synthetic data that preserves key characteristics, and transform datasets to improve model robustness. We have also explored techniques for interpreting black-box models, enhancing their trustworthiness through robust explanations, and incorporating human-centered design principles.

If you share these goals, please reach out. We are always looking for collaborators and new group members!

Prospective lab members: If you are interested in joining my group at Harvard, please read this.

Bio

I obtained a PhD in computer science from MIT, where I worked at CSAIL on various topics in machine learning and natural language processing. I also hold BSc (Licenciatura) and MS degrees in mathematics from ITAM and the Courant Institute (NYU), respectively. For my MS thesis, I worked on semidefinite programming for domain adaptation. Between my Master's and PhD, I spent a year at IBM's T.J. Watson Research Center, working in the Speech Recognition and NLP teams.

News

- [12/06/2025] Giving an invited talk at UniReps Workshop @ NeurIPS 2025. Slides

- [08/25/2025] Paper: Continuous Language Model Interpolation for Dynamic and Controllable Text Generation led by Sara Kangaslahti accepted to TMLR.

- [08/20/2025] Paper: Investigating the interaction of linguistic and mathematical reasoning in language models using multilingual number puzzles led by Antara Bhattacharya accepted to EMNLP 2025.

- [08/20/2025] Paper: Data Drives Unstable Hierarchical Generalization in LMs led by Sunny Qin accepted to EMNLP 2025.

- [07/08/2025] Giving a keynote talk at AstroAI Workshop 2025.

- [07/08/2025] Paper: To Backtrack or Not to Backtrack: When Sequential Search Limits Model Reasoning led by Sunny Qin accepted to COLM 2025.

- [05/20/2025] Paper: What is the Right Notion of Distance between Predict-then-Optimize Tasks? led by Paula Rodriguez-Diaz accepted to UAI 2025.

- [03/19/2025] OTDD has been incorporated into the DataSimilarity R package.

- [01/22/2025] Paper: DDEQs: Distributional Deep Equilibrium Models through Wasserstein Gradient Flows led by Jonathan Geuter accepted to AISTATS 2025.

- [01/22/2025] Paper: Mixture of Parrots: Experts improve memorization more than reasoning led by Samy Jelassi accepted to ICLR 2025.

- [09/27/2024] Our son Noah was born!

- [09/26/2024] Paper: A Label is Worth a Thousand Images in Dataset Distillation led by Sunny Qin accepted to NeurIPS 2024.

- [07/26/2024] Giving a talk at ICML 2024 'Foundation Models in the Wild' workshop.

- [07/23/2024] Attending ICML 2024 in Vienna. Our group is presenting work on LLMs, Optimal Transport, Model Interpolation and more!

- [06/26/2024] Joined the Kempner Institute as Affiliate Faculty.

- [05/01/2024] Paper: Tag-LLM: Repurposing General-Purpose LLMs for Specialized Domains led by MSR Summer Intern Junhong Shen accepted to ICML 2024.

- [05/11/2023] Will be serving as AC for ACML 2023.

- [05/08/2023] Paper: Generating Synthetic Datasets by Interpolating along Generalized Geodesics led by MSR Summer Intern Jiaojiao Fan accepted to UAI 2023.

- [04/24/2023] Paper: InfoOT: Information Maximizing Optimal Transport led by MSR Summer Intern Ching-Yao Chuang accepted to ICML 2023.

- [02/28/2023] Will be serving as AC for NeurIPS 2023.

- [02/24/2023] Paper: Domain adaptation using optimal transport for invariant learning using histopathology datasets led by MSR Summer Intern Kia Falahkheirkhah accepted to MIDL 2023.

- [10/06/2022] New Preprint: InfoOT: Information Maximizing Optimal Transport.

- [09/30/2022] New Preprint: Neural Unbalanced Optimal Transport via Cycle-Consistent Semi-Couplings.

- [09/15/2022] Paper: Are GANs overkill for NLP? accepted to NeurIPS 2022.

- [07/15/2022] Paper: Optimizing Functionals on the Space of Probabilities with Input Convex Neural Networks accepted to TMLR.

- [07/06/2022] Anna Yeaton will be presenting our work on Optimal Transport for Histopathology at MIDL 2022.

- [05/16/2022] Giving a talk at the IMSI Workshop on Applied Optimal Transport: 'Machine Learning in the Space of Datasets: an Optimal Transport Perspective'

- [05/14/2022] Will be serving as AC for ACML 2022.

- [03/18/2022] Giving a talk at the AMS Special Session on Maths of Data Science: 'Principled Data Manipulation with Optimal Transport'

- [01/31/2022] I will be serving as associate chair for ICML 2022.

- [12/06/21-12/14/21] 'Attending' NeurIPS. Presenting 'JKO-ICNN' at the OTML workshop.

- [11/15/2021] Guest lecture on NLP/Language Models at Harvard's CS182.

- [10/28/2021] Talk: 'Principled Data Manipulation with OT', BIRS-CMO workshop on 'Geometry & Learning from Data'.

- [08/21/2021] Paper: From Human Explanation to Model Interpretability: A Framework Based on Weight of Evidence accepted to HCOMP 2021.

- [07/23/2021] Talk: 'Comparing, Transforming, and Optimizing Datasets with OT', ML4Data workshop at ICML.

- [06/01/2021] New Preprint: Optimizing Functionals on the Space of Probabilities with Input Convex Neural Networks.

- [05/08/2021] Paper: Dataset Dynamics via Gradient Flows in Probability Space accepted to ICML 2021.

- [03/20/2021] Reviewer award at ICLR 2021.

- [12/06/20-12/12/20] 'Attending' NeurIPS. Presenting 'Geometric Dataset Distances via Optimal Transport'.

- [11/18/20] Giving a talk at MSR's Directions in ML series.

- [09/28/20] Paper: Geometric Dataset Distances via Optimal Transport accepted to NeurIPS 2020.

- [09/28/20] Optimal Transport Dataset Distance featured in the MSR blog.

- [01/06/20] Paper: Unsupervised Hierarchy Matching with Optimal Transport over Hyperbolic Spaces accepted to AISTATS 2020.

- [12/10-12/15] At NeurIPS 2019, presenting at the HCML and OTML Workshops

- [10/31/19] New preprint on Interpretability via Weight of Evidence (to be presented at HCML@NeurIPS2019)

- [09/01/19] Reviewer award (among 400 highest-scoring reviewers) at NeurIPS 2019.

- [08/26/19] Started postdoc at MSR

- [07/15/19] Final thesis submission - PhD completed!

- [06/21/19] Successfully defended my thesis!

- [04/21/19] Paper: Functional Transparency for Structured Data: a Game-Theoretic Approach. accepted to ICML 2019.

- [04/21/19] Paper: Learning Generative Models Across Incomparable Spaces accepted to ICML 2019.

- [04/14-04/19] At AISTATS 2019.

- [12/22/18] Paper: Towards Optimal Transport with Global Invariances accepted to AISTATS 2019.

- [12/21/18] Paper: Towards Robust, Locally Linear Deep Networks accepted to ICLR 2019.

- [12/08/18] Received best paper award in the R2L Workshop at NeurIPS. Congratulations Charlotte!

- [12/02->09/18] At NeurIPS 2018.

- [11/03/18] Talk on Gromov-Wasserstein embedding alignment @ EMNLP.

- [10/30-11/04] At EMNLP 2018.

- [10/30] Our EMNLP paper was covered by MIT NEWS.

- [09/05/18] Paper: Towards Robust Interpretability with Self-Explaining Neural Networks accepted to NIPS 2018.

- [09/03/18] Named one of 218 best reviewers at NIPS 2018. Thanks NIPS for the free registration!

- [08/25-26/18] In Mexico City, for our student-run RIIAA conference.

- [08/24/18] Finished Internship!

- [08/10/18] Paper: Gromov-Wasserstein Alignment of Word Embedding Spaces accepted to EMNLP 2018.

- [07/10-15/18] At ICML 2018.

- [06/25/18] New Preprint: Towards Optimal Transport with Global Invariances.

- [05/29/18] Started Internship at MSR NYC.

- [04/17/18] Guest lecture on Interpretability for NLP @ CMU ECE739.

- [04/11/18] Presenting our work on Structured OT @ AISTATS.

- [02/01/18] Named finalist for Facebook PhD Fellowship.

- [01/12/18] Talk at OpenAI on interpretability + optimal transport.

- [11/16/17] Talk at CompLang Seminar @ MIT on interpretability in NLP.

Projects

Publications

Most recent publications on Google Scholar.

To Backtrack or Not to Backtrack: When Sequential Search Limits Model Reasoning

Sunny Qin, David Alvarez-Melis*, Samy Jelassi*, Eran Malach*

COLM'25: Conference on Language Modeling. 2025.

What is the Right Notion of Distance between Predict-then-Optimize Tasks?

Paula Rodriguez-Diaz, Lingkai Kong, Kai Wang, David Alvarez-Melis, Milind Tambe

UAI'25: Uncertainty in Artificial Intelligence. 2025.

DDEQs: Distributional Deep Equilibrium Models through Wasserstein Gradient Flows

Jonathan Geuter, Clément Bonet, Anna Korba, David Alvarez-Melis

AISTATS'25: International Conference on Artificial Intelligence and Statistics. 2025.

Mixture of Parrots: Experts improve memorization more than reasoning

Samy Jelassi, Clara Mohri, David Brandfonbrener, Alex Gu, Nikhil Vyas, Nikhil Anand, David Alvarez-Melis, Yuanzhi Li, Sham M. Kakade, Eran Malach

ICLR'25: International Conference on Learning Representations. 2025.

A Label is Worth A Thousand Images in Dataset Distillation

Tian Qin, Zhiwei Deng, David Alvarez-Melis

NeurIPS'24: Neural Information Processing Systems. 2024.

@inproceedings{NEURIPS2024_ee45939f,

author = {Qin, Tian and Deng, Zhiwei and Alvarez-Melis, David},

booktitle = {Advances in Neural Information Processing Systems},

editor = {A. Globerson and L. Mackey and D. Belgrave and A. Fan and U. Paquet and J. Tomczak and C. Zhang},

pages = {131946--131971},

publisher = {Curran Associates, Inc.},

title = {A Label is Worth A Thousand Images in Dataset Distillation},

url = {https://proceedings.neurips.cc/paper_files/paper/2024/file/ee45939f4403e8abd63a15a29a9c055b-Paper-Conference.pdf},

volume = {37},

year = {2024}

}

Tag-LLM: Repurposing General-Purpose LLMs for Specialized Domains

Junhong Shen, Neil Tenenholtz, James Brian Hall, David Alvarez-Melis, Nicolo Fusi

ICML'24: International Conference on Machine Learning. 2024.

@InProceedings{pmlr-v235-shen24f,

title = {Tag-{LLM}: Repurposing General-Purpose {LLM}s for Specialized Domains},

author = {Shen, Junhong and Tenenholtz, Neil and Hall, James Brian and Alvarez-Melis, David and Fusi, Nicolo},

booktitle = {Proceedings of the 41st International Conference on Machine Learning},

pages = {44759--44773},

year = {2024},

editor = {Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix},

volume = {235},

series = {Proceedings of Machine Learning Research},

month = {21--27 Jul},

publisher = {PMLR},

pdf = {https://raw.githubusercontent.com/mlresearch/v235/main/assets/shen24f/shen24f.pdf},

url = {https://proceedings.mlr.press/v235/shen24f.html},

abstract = {Large Language Models (LLMs) have demonstrated remarkable proficiency in understanding and generating natural language. However, their capabilities wane in highly specialized domains underrepresented in the pretraining corpus, such as physical and biomedical sciences. This work explores how to repurpose general LLMs into effective task solvers for specialized domains. We introduce a novel, model-agnostic framework for learning custom input tags, which are parameterized as continuous vectors appended to the LLM’s embedding layer, to condition the LLM. We design two types of input tags: domain tags are used to delimit specialized representations (e.g., chemical formulas) and provide domain-relevant context; function tags are used to represent specific functions (e.g., predicting molecular properties) and compress function-solving instructions. We develop a three-stage protocol to learn these tags using auxiliary data and domain knowledge. By explicitly disentangling task domains from task functions, our method enables zero-shot generalization to unseen problems through diverse combinations of the input tags. It also boosts LLM’s performance in various specialized domains, such as predicting protein or chemical properties and modeling drug-target interactions, outperforming expert models tailored to these tasks.}

}

Generating Synthetic Datasets by Interpolating along Generalized Geodesics

Jiaojiao Fan, David Alvarez-Melis

UAI'23: Uncertainty in Artificial Intelligence. 2023.

@INPROCEEDINGS{fan2023generating,

title = "Generating Synthetic Datasets by Interpolating along

Generalized Geodesics",

booktitle = "Proceedings of the {Thirty-Ninth} Conference on Uncertainty in

Artificial Intelligence",

author = "Fan, Jiaojiao and Alvarez-Melis, David",

publisher = "Proceedings of Machine Learning Research",

year = 2023,

conference = "Uncertainty in Artificial Intelligence"

}

InfoOT: Information Maximizing Optimal Transport

Ching-Yao Chuang, Stefanie Jegelka, David Alvarez-Melis

ICML'23: International Conference on Machine Learning. 2023.

@INPROCEEDINGS{chuang2023infoot,

title = "{InfoOT}: Information Maximizing Optimal Transport",

booktitle = "Proceedings of the 40th International Conference on Machine

Learning",

author = "Chuang, Ching-Yao and Jegelka, Stefanie and Alvarez-Melis,

David",

editor = "Krause, Andreas and Brunskill, Emma and Cho, Kyunghyun and

Engelhardt, Barbara and Sabato, Sivan and Scarlett, Jonathan",

publisher = "PMLR",

volume = 202,

pages = "6228--6242",

series = "Proceedings of Machine Learning Research",

institution = "PMLR",

year = 2023

}

Optimizing Functionals on the Space of Probabilities with Input Convex Neural Networks

David Alvarez-Melis, Yair Schiff, Youssef Mroueh

Transactions of Machine Learning Research (TMLR). 2022.

Earlier version at OTML: NeurIPS'21 Workshop on Optimal Transport in Machine Learning.

From Human Explanation to Model Interpretability: A Framework Based on Weight of Evidence

David Alvarez-Melis, Harmanpreet Kaur, Hal Daumé III, Hanna Wallach, Jennifer Wortman Vaughan

HCOMP '21: The 9th AAAI Conference on Human Computation and Crowdsourcing. 2021.

Dataset Dynamics via Gradient Flows in Probability Space

David Alvarez-Melis, Nicolò Fusi

ICML'21: International Conference on Machine Learning. 2021.

@InProceedings{alvarez-melis2021dataset,

title = {Dataset Dynamics via Gradient Flows in Probability Space},

author = {Alvarez-Melis, David and Fusi, Nicol\`o},

booktitle = {Proceedings of the 38th International Conference on Machine Learning},

pages = {219--230},

year = {2021},

editor = {Meila, Marina and Zhang, Tong},

volume = {139},

series = {Proceedings of Machine Learning Research},

month = {18--24 Jul},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v139/alvarez-melis21a/alvarez-melis21a.pdf},

url = {https://proceedings.mlr.press/v139/alvarez-melis21a.html},

abstract = {Various machine learning tasks, from generative modeling to domain adaptation, revolve around the concept of dataset transformation and manipulation. While various methods exist for transforming unlabeled datasets, principled methods to do so for labeled (e.g., classification) datasets are missing. In this work, we propose a novel framework for dataset transformation, which we cast as optimization over data-generating joint probability distributions. We approach this class of problems through Wasserstein gradient flows in probability space, and derive practical and efficient particle-based methods for a flexible but well-behaved class of objective functions. Through various experiments, we show that this framework can be used to impose constraints on classification datasets, adapt them for transfer learning, or to re-purpose fixed or black-box models to classify {—}with high accuracy{—} previously unseen datasets.}

}

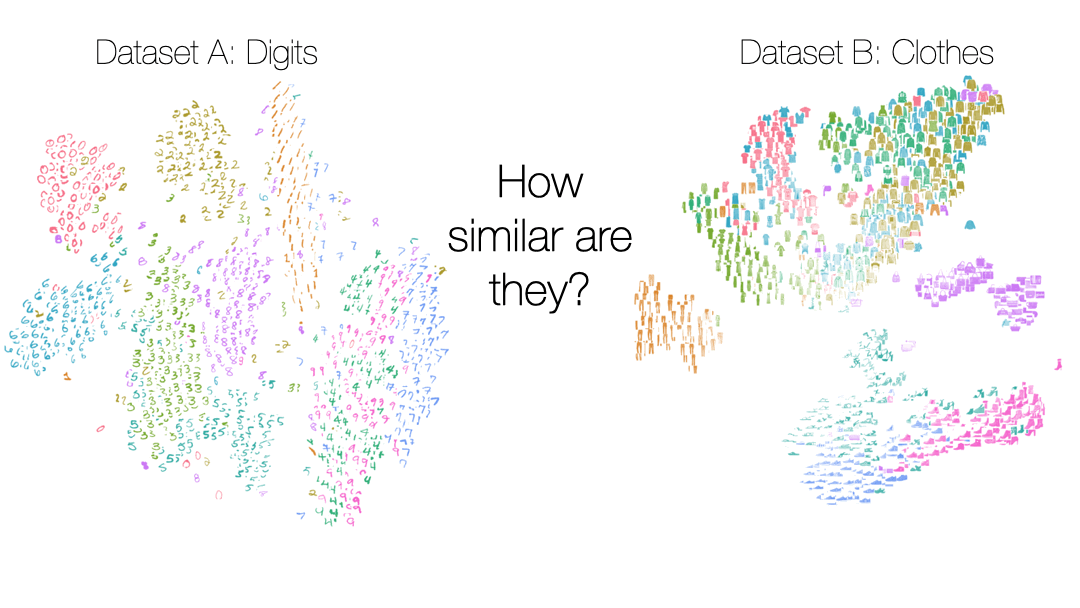

Geometric Dataset Distances via Optimal Transport

David Alvarez-Melis, Nicolò Fusi

NeurIPS'20: Neural Information Processing Systems. 2020.

Earlier version at AutoML @ ICML 2020.

@inproceedings{alvarez-melis2020geometric,

author = {Alvarez-Melis, David and Fusi, Nicolo},

booktitle = {Advances in Neural Information Processing Systems},

editor = {H. Larochelle and M. Ranzato and R. Hadsell and M. F. Balcan and H. Lin},

pages = {21428--21439},

publisher = {Curran Associates, Inc.},

title = {Geometric Dataset Distances via Optimal Transport},

url = {https://proceedings.neurips.cc/paper/2020/file/f52a7b2610fb4d3f74b4106fb80b233d-Paper.pdf},

volume = {33},

year = {2020}

}

Unsupervised Hierarchy Matching with Optimal Transport over Hyperbolic Spaces

David Alvarez-Melis, Youssef Mroueh, Tommi S. Jaakkola

AISTATS'20: Artificial Intelligence and Statistics. 2020.

Earlier version at OTML: NeurIPS'18 Workshop on Optimal Transport for Machine Learning. Spotlight.

@InProceedings{alvarez-melis2020unsupervised,

title = {Unsupervised Hierarchy Matching with Optimal Transport over Hyperbolic Spaces},

author = {Alvarez-Melis, David and Mroueh, Youssef and Jaakkola, Tommi},

booktitle = {Proceedings of the Twenty Third International Conference on Artificial Intelligence and Statistics},

pages = {1606--1617},

year = {2020},

editor = {Chiappa, Silvia and Calandra, Roberto},

volume = {108},

series = {Proceedings of Machine Learning Research},

month = {26--28 Aug},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v108/alvarez-melis20a/alvarez-melis20a.pdf},

url = {http://proceedings.mlr.press/v108/alvarez-melis20a.html},

}

Optimal Transport in Structured Domains: Algorithms and Applications

David Alvarez-Melis (advisor: Tommi S. Jaakkola)

PhD Thesis, MIT. 2019.

Learning Generative Models across Incomparable Spaces

Charlotte Bunne, David Alvarez-Melis, Andreas Krause, Stefanie Jegelka

ICML'19: International Conference on Machine Learning. 2019.

Earlier version at R2L: NeurIPS'18 Workshop on Relational Representation Learning. Best Paper Award.

@InProceedings{pmlr-v97-bunne19a,

title = {Learning Generative Models across Incomparable Spaces},

author = {Bunne, Charlotte and Alvarez-Melis, David and Krause, Andreas and Jegelka, Stefanie},

booktitle = {Proceedings of the 36th International Conference on Machine Learning},

pages = {851--861},

year = {2019},

editor = {Chaudhuri, Kamalika and Salakhutdinov, Ruslan},

volume = {97},

series = {Proceedings of Machine Learning Research},

address = {Long Beach, California, USA},

month = {09--15 Jun},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v97/bunne19a/bunne19a.pdf},

url = {http://proceedings.mlr.press/v97/bunne19a.html},

abstract = {Generative Adversarial Networks have shown remarkable success in learning a distribution that faithfully recovers a reference distribution in its entirety. However, in some cases, we may want to only learn some aspects (e.g., cluster or manifold structure), while modifying others (e.g., style, orientation or dimension). In this work, we propose an approach to learn generative models across such incomparable spaces, and demonstrate how to steer the learned distribution towards target properties. A key component of our model is the Gromov-Wasserstein distance, a notion of discrepancy that compares distributions relationally rather than absolutely. While this framework subsumes current generative models in identically reproducing distributions, its inherent flexibility allows application to tasks in manifold learning, relational learning and cross-domain learning.}

}

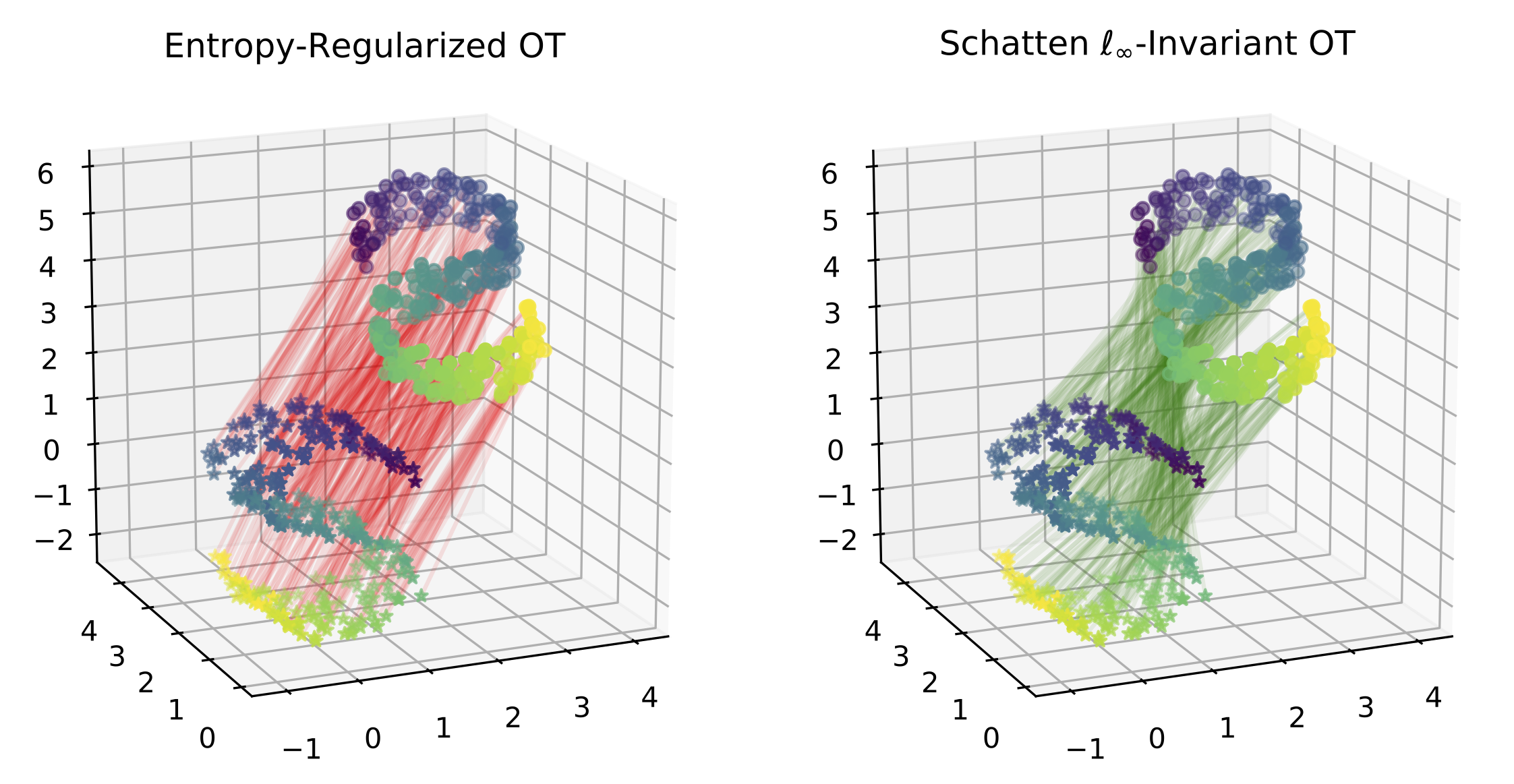

Towards Optimal Transport with Global Invariances

David Alvarez-Melis, Stefanie Jegelka, Tommi S. Jaakkola

AISTATS'19: Artificial Intelligence and Statistics. 2019.

@InProceedings{pmlr-v89-alvarez-melis19a,

title = {Towards Optimal Transport with Global Invariances},

author = {Alvarez-Melis, David and Jegelka, Stefanie and Jaakkola, Tommi S.},

booktitle = {Proceedings of Machine Learning Research},

pages = {1870--1879},

year = {2019},

editor = {Chaudhuri, Kamalika and Sugiyama, Masashi},

volume = {89},

series = {Proceedings of Machine Learning Research},

address = {},

month = {16--18 Apr},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v89/alvarez-melis19a/alvarez-melis19a.pdf},

url = {http://proceedings.mlr.press/v89/alvarez-melis19a.html},

abstract = {Many problems in machine learning involve calculating correspondences between sets of objects, such as point clouds or images. Discrete optimal transport provides a natural and successful approach to such tasks whenever the two sets of objects can be represented in the same space, or at least distances between them can be directly evaluated. Unfortunately neither requirement is likely to hold when object representations are learned from data. Indeed, automatically derived representations such as word embeddings are typically fixed only up to some global transformations, for example, reflection or rotation. As a result, pairwise distances across two such instances are ill-defined without specifying their relative transformation. In this work, we propose a general framework for optimal transport in the presence of latent global transformations. We cast the problem as a joint optimization over transport couplings and transformations chosen from a flexible class of invariances, propose algorithms to solve it, and show promising results in various tasks, including a popular unsupervised word translation benchmark.}

}

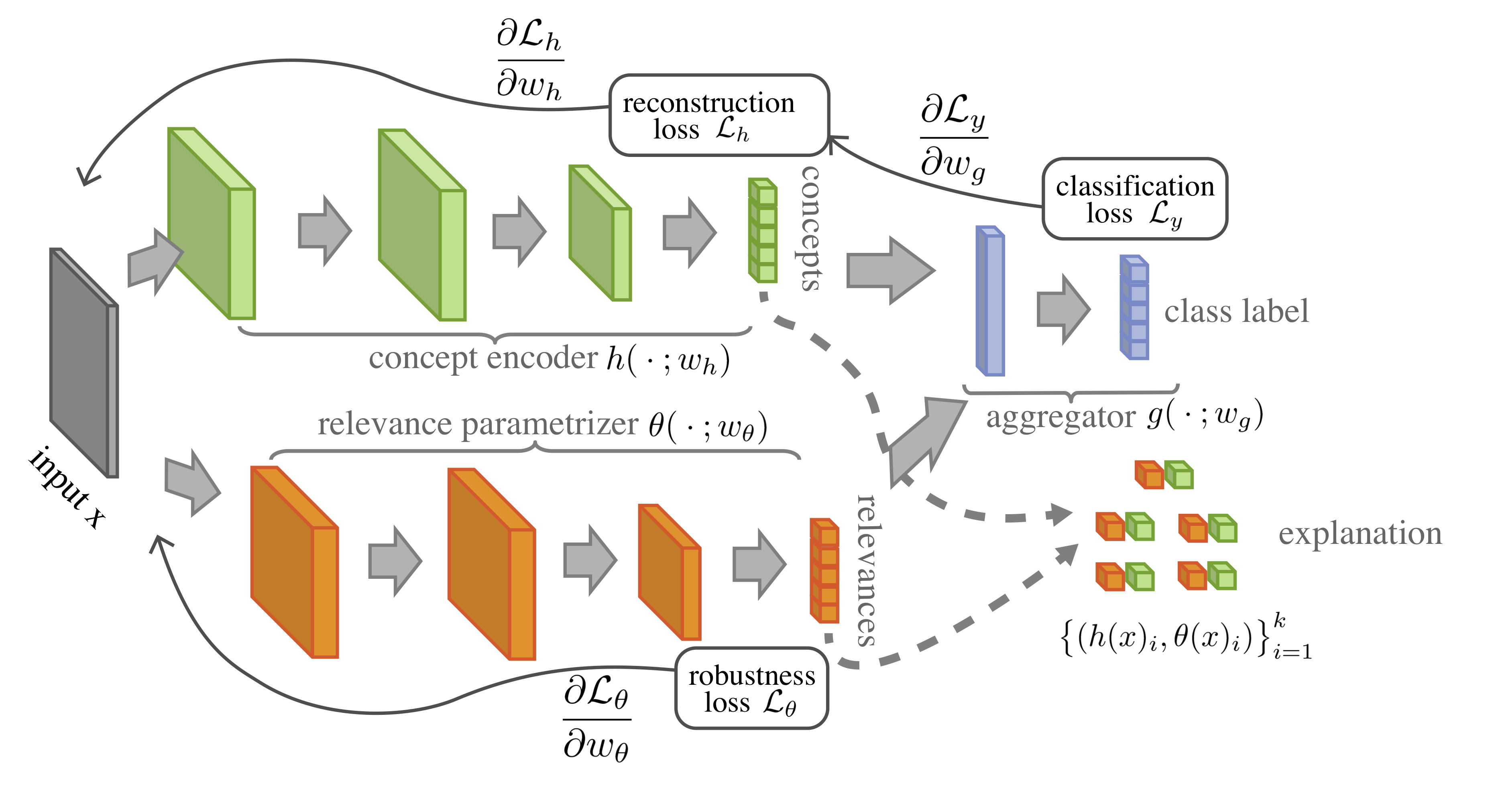

Towards Robust Interpretability with Self-Explaining Neural Networks

David Alvarez-Melis, Tommi S. Jaakkola

NeurIPS'18: Neural Information Processing Systems. 2018.

@incollection{NIPS2018_8003,

title = {Towards Robust Interpretability with Self-Explaining Neural Networks},

author = {Alvarez Melis, David and Jaakkola, Tommi},

booktitle = {Advances in Neural Information Processing Systems 31},

editor = {S. Bengio and H. Wallach and H. Larochelle and K. Grauman and N. Cesa-Bianchi and R. Garnett},

pages = {7786--7795},

year = {2018},

publisher = {Curran Associates, Inc.},

url = {http://papers.nips.cc/paper/8003-towards-robust-interpretability-with-self-explaining-neural-networks.pdf}

}

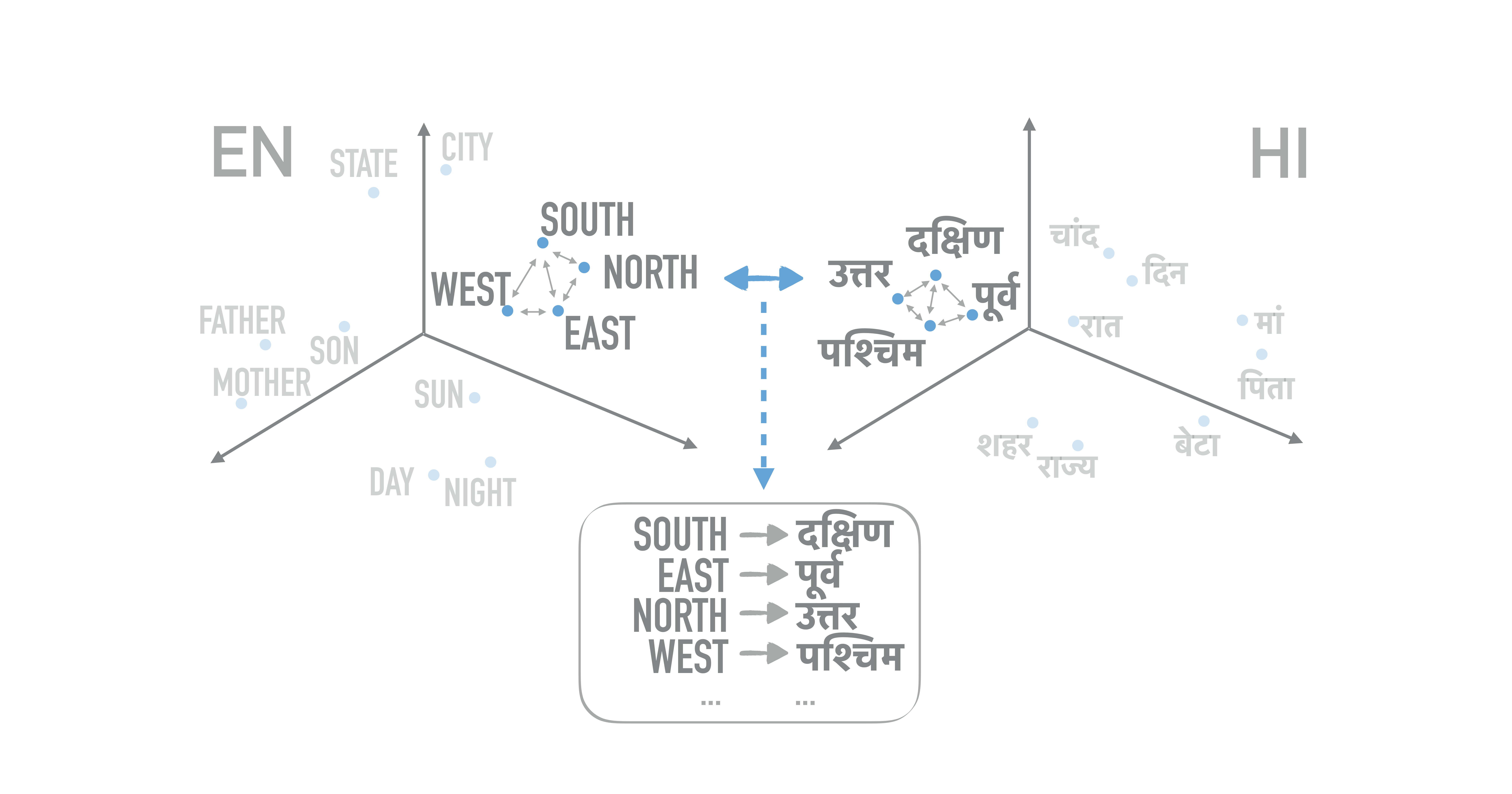

Gromov-Wasserstein Alignment of Word Embedding Spaces

David Alvarez-Melis, Tommi S. Jaakkola

EMNLP'18: Empirical Methods in Natural Language Processing. 2018. Oral Presentation.

@InProceedings{alvarezmelis2018gromov,

author = {Alvarez-Melis, David and Jaakkola, Tommi},

title = {Gromov-Wasserstein Alignment of Word Embedding Spaces},

booktitle = {Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing},

year = {2018},

publisher = {Association for Computational Linguistics},

pages = {1881--1890},

location = {Brussels, Belgium},

url = {http://aclweb.org/anthology/D18-1214}

}

Structured Optimal Transport

David Alvarez-Melis, Tommi S. Jaakkola, Stefanie Jegelka

AISTATS'18: Artificial Intelligence and Statistics. 2018. Oral Presentation.

Earlier version at NIPS Workshop on Optimal Transport for Machine Learning, 2017, as Extended Oral.

@InProceedings{pmlr-v84-alvarez-melis18a,

title = {Structured Optimal Transport},

author = {David Alvarez-Melis and Tommi Jaakkola and Stefanie Jegelka},

booktitle = {Proceedings of the Twenty-First International Conference on Artificial Intelligence and Statistics},

pages = {1771--1780},

year = {2018},

editor = {Amos Storkey and Fernando Perez-Cruz},

volume = {84},

series = {Proceedings of Machine Learning Research},

address = {Playa Blanca, Lanzarote, Canary Islands},

month = {09--11 Apr},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v84/alvarez-melis18a/alvarez-melis18a.pdf},

url = {http://proceedings.mlr.press/v84/alvarez-melis18a.html},

abstract = {Optimal Transport has recently gained interest in machine learning for applications ranging from domain adaptation to sentence similarities or deep learning. Yet, its ability to capture frequently occurring structure beyond the "ground metric" is limited. In this work, we develop a nonlinear generalization of (discrete) optimal transport that is able to reflect much additional structure. We demonstrate how to leverage the geometry of this new model for fast algorithms, and explore connections and properties. Illustrative experiments highlight the benefit of the induced structured couplings for tasks in domain adaptation and natural language processing.}

}

A Causal Framework for Explaining the Predictions of Black-Box Sequence-to-Sequence Models

David Alvarez-Melis, Tommi S. Jaakkola

EMNLP'17: Empirical Methods in Natural Language Processing. 2017.

- MIT News: How Neural Networks think.

@InProceedings{alvarezmelis2017causal,

author = {Alvarez-Melis, David and Jaakkola, Tommi},

title = {A causal framework for explaining the predictions of black-box sequence-to-sequence models},

booktitle = {Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing},

month = {September},

year = {2017},

address = {Copenhagen, Denmark},

publisher = {Association for Computational Linguistics},

pages = {412--421},

url = {https://www.aclweb.org/anthology/D17-1042}

}

Tree-structured Decoding with Doubly-recurrent Neural Networks

David Alvarez-Melis, Tommi S. Jaakkola

ICLR'17: International Conference on Learning Representations. 2017.

@inproceedings{alvarezmelis2017tree,

title={Tree-structured decoding with doubly-recurrent neural networks},

author={Alvarez-Melis, David and Jaakkola, Tommi S},

booktitle = {Proceedings of the International Conference on Learning Representations (ICLR)},

year={2017}

}

Word Embeddings as Metric Recovery in Semantic Spaces

Tatsunori B. Hashimoto, David Alvarez-Melis, Tommi S. Jaakkola

TACL: Transactions of the Association for Computational Linguistics. 2016. (presented at ACL'16).

@article{Hashimoto2016Word,

author = {Hashimoto, Tatsunori and Alvarez-Melis, David and Jaakkola, Tommi },

title = {Word Embeddings as Metric Recovery in Semantic Spaces},

journal = {Transactions of the Association for Computational Linguistics},

volume = {4},

year = {2016},

issn = {2307-387X},

url = {https://transacl.org/ojs/index.php/tacl/article/view/809},

pages = {273--286}

}

Neural Unbalanced Optimal Transport via Cycle-Consistent Semi-Couplings

Frederike Lübeck*, Charlotte Bunne*, Gabriele Gut, Jacobo Sarabia del Castillo, Lucas Pelkmans, David Alvarez-Melis

Domain Adaptation using Optimal Transport for Invariant Learning using Histopathology datasets

Kianoush Falahkheirkhah, Alex Lu, David Alvarez-Melis, and Grace Huynh

Medical Imaging with Deep Learning (MIDL). 2023.

Are GANs overkill for NLP?

David Alvarez-Melis*, Vikas Garg*, Adam Tauman Kalai*

NeurIPS'22: Neural Information Processing Systems. 2022.

Hierarchical Optimal Transport for Comparing Histopathology Datasets

Anna Yeaton, Rahul G. Krishnan, Rebecca Mieloszyk, David Alvarez-Melis, Grace Huynh

Medical Imaging with Deep Learning (MIDL). 2022.

Interpretable Distribution Shift Detection using Optimal Transport

Neha Hulkund, Nicolo Fusi, Jennifer Wortman Vaughan, David Alvarez-Melis

DataPerf Workshop at ICML 2022

Optimizing Functionals on the Space of Probabilities with Input Convex Neural Networks

David Alvarez-Melis, Yair Schiff, Youssef Mroueh

Transactions of Machine Learning Research (TMLR). 2022.

Earlier version at OTML: NeurIPS'21 Workshop on Optimal Transport in Machine Learning.

Dataset Dynamics via Gradient Flows in Probability Space

David Alvarez-Melis, Nicolò Fusi

ICML'21: International Conference on Machine Learning. 2021.

@InProceedings{alvarez-melis2021dataset,

title = {Dataset Dynamics via Gradient Flows in Probability Space},

author = {Alvarez-Melis, David and Fusi, Nicol\`o},

booktitle = {Proceedings of the 38th International Conference on Machine Learning},

pages = {219--230},

year = {2021},

editor = {Meila, Marina and Zhang, Tong},

volume = {139},

series = {Proceedings of Machine Learning Research},

month = {18--24 Jul},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v139/alvarez-melis21a/alvarez-melis21a.pdf},

url = {https://proceedings.mlr.press/v139/alvarez-melis21a.html},

abstract = {Various machine learning tasks, from generative modeling to domain adaptation, revolve around the concept of dataset transformation and manipulation. While various methods exist for transforming unlabeled datasets, principled methods to do so for labeled (e.g., classification) datasets are missing. In this work, we propose a novel framework for dataset transformation, which we cast as optimization over data-generating joint probability distributions. We approach this class of problems through Wasserstein gradient flows in probability space, and derive practical and efficient particle-based methods for a flexible but well-behaved class of objective functions. Through various experiments, we show that this framework can be used to impose constraints on classification datasets, adapt them for transfer learning, or to re-purpose fixed or black-box models to classify {—}with high accuracy{—} previously unseen datasets.}

}

Geometric Dataset Distances via Optimal Transport

David Alvarez-Melis, Nicolò Fusi

NeurIPS'20: Neural Information Processing Systems. 2020.

Earlier version at AutoML @ ICML 2020.

@inproceedings{alvarez-melis2020geometric,

author = {Alvarez-Melis, David and Fusi, Nicolo},

booktitle = {Advances in Neural Information Processing Systems},

editor = {H. Larochelle and M. Ranzato and R. Hadsell and M. F. Balcan and H. Lin},

pages = {21428--21439},

publisher = {Curran Associates, Inc.},

title = {Geometric Dataset Distances via Optimal Transport},

url = {https://proceedings.neurips.cc/paper/2020/file/f52a7b2610fb4d3f74b4106fb80b233d-Paper.pdf},

volume = {33},

year = {2020}

}

Unsupervised Hierarchy Matching with Optimal Transport over Hyperbolic spaces

David Alvarez-Melis, Youssef Mroueh, Tommi S. Jaakkola

AISTATS'20: Artificial Intelligence and Statistics. 2020.

Earlier version at OTML: NeurIPS'18 Workshop on Optimal Transport for Machine Learning. Spotlight.

@InProceedings{alvarez-melis2020unsupervised,

title = {Unsupervised Hierarchy Matching with Optimal Transport over Hyperbolic Spaces},

author = {Alvarez-Melis, David and Mroueh, Youssef and Jaakkola, Tommi},

booktitle = {Proceedings of the Twenty Third International Conference on Artificial Intelligence and Statistics},

pages = {1606--1617},

year = {2020},

editor = {Chiappa, Silvia and Calandra, Roberto},

volume = {108},

series = {Proceedings of Machine Learning Research},

month = {26--28 Aug},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v108/alvarez-melis20a/alvarez-melis20a.pdf},

url = {http://proceedings.mlr.press/v108/alvarez-melis20a.html},

}

Probabilistic Bias Mitigation in Word Embeddings

Hailey James-Sorenson, David Alvarez-Melis

HCML @ NeurIPS 2019

Optimal Transport in Structured Domains: Algorithms and Applications

David Alvarez-Melis (advisor: Tommi S. Jaakkola)

PhD Thesis, MIT. 2019.

Functional Transparency for Structured Data: a Game-Theoretic Approach

Guang-He Lee, Wengong Jin, David Alvarez-Melis, Tommi S. Jaakkola

ICML'19: International Conference on Machine Learning.

@InProceedings{pmlr-v97-lee19b,

title = {Functional Transparency for Structured Data: a Game-Theoretic Approach},

author = {Lee, Guang-He and Jin, Wengong and Alvarez-Melis, David and Jaakkola, Tommi},

booktitle = {Proceedings of the 36th International Conference on Machine Learning},

pages = {3723--3733},

year = {2019},

editor = {Chaudhuri, Kamalika and Salakhutdinov, Ruslan},

volume = {97},

series = {Proceedings of Machine Learning Research},

address = {Long Beach, California, USA},

month = {09--15 Jun},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v97/lee19b/lee19b.pdf},

url = {http://proceedings.mlr.press/v97/lee19b.html},

abstract = {We provide a new approach to training neural models to exhibit transparency in a well-defined, functional manner. Our approach naturally operates over structured data and tailors the predictor, functionally, towards a chosen family of (local) witnesses. The estimation problem is setup as a co-operative game between an unrestricted \emph{predictor} such as a neural network, and a set of \emph{witnesses} chosen from the desired transparent family. The goal of the witnesses is to highlight, locally, how well the predictor conforms to the chosen family of functions, while the predictor is trained to minimize the highlighted discrepancy. We emphasize that the predictor remains globally powerful as it is only encouraged to agree locally with locally adapted witnesses. We analyze the effect of the proposed approach, provide example formulations in the context of deep graph and sequence models, and empirically illustrate the idea in chemical property prediction, temporal modeling, and molecule representation learning.}

}

Learning Generative Models across Incomparable Spaces

Charlotte Bunne, David Alvarez-Melis, Andreas Krause, Stefanie Jegelka

ICML'19: International Conference on Machine Learning.

Earlier version at R2L: NeurIPS'18 Workshop on Relational Representation Learning. Best Paper Award.

@InProceedings{pmlr-v97-bunne19a,

title = {Learning Generative Models across Incomparable Spaces},

author = {Bunne, Charlotte and Alvarez-Melis, David and Krause, Andreas and Jegelka, Stefanie},

booktitle = {Proceedings of the 36th International Conference on Machine Learning},

pages = {851--861},

year = {2019},

editor = {Chaudhuri, Kamalika and Salakhutdinov, Ruslan},

volume = {97},

series = {Proceedings of Machine Learning Research},

address = {Long Beach, California, USA},

month = {09--15 Jun},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v97/bunne19a/bunne19a.pdf},

url = {http://proceedings.mlr.press/v97/bunne19a.html},

abstract = {Generative Adversarial Networks have shown remarkable success in learning a distribution that faithfully recovers a reference distribution in its entirety. However, in some cases, we may want to only learn some aspects (e.g., cluster or manifold structure), while modifying others (e.g., style, orientation or dimension). In this work, we propose an approach to learn generative models across such incomparable spaces, and demonstrate how to steer the learned distribution towards target properties. A key component of our model is the Gromov-Wasserstein distance, a notion of discrepancy that compares distributions relationally rather than absolutely. While this framework subsumes current generative models in identically reproducing distributions, its inherent flexibility allows application to tasks in manifold learning, relational learning and cross-domain learning.}

}

Towards Robust, Locally Linear Deep Networks

Guang-He Lee, David Alvarez-Melis, Tommi S. Jaakkola

ICLR'19: International Conference on Learning Representations. 2019.

Towards Optimal Transport with Global Invariances

David Alvarez-Melis, Stefanie Jegelka, Tommi S. Jaakkola

AISTATS'19: Artificial Intelligence and Statistics. 2019.

@InProceedings{pmlr-v89-alvarez-melis19a,

title = {Towards Optimal Transport with Global Invariances},

author = {Alvarez-Melis, David and Jegelka, Stefanie and Jaakkola, Tommi S.},

booktitle = {Proceedings of the Twenty-Second International Conference on Artificial Intelligence and Statistics},

pages = {1870--1879},

year = {2019},

editor = {Chaudhuri, Kamalika and Sugiyama, Masashi},

volume = {89},

series = {Proceedings of Machine Learning Research},

address = {},

month = {16--18 Apr},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v89/alvarez-melis19a/alvarez-melis19a.pdf},

url = {http://proceedings.mlr.press/v89/alvarez-melis19a.html},

abstract = {Many problems in machine learning involve calculating correspondences between sets of objects, such as point clouds or images. Discrete optimal transport provides a natural and successful approach to such tasks whenever the two sets of objects can be represented in the same space, or at least distances between them can be directly evaluated. Unfortunately neither requirement is likely to hold when object representations are learned from data. Indeed, automatically derived representations such as word embeddings are typically fixed only up to some global transformations, for example, reflection or rotation. As a result, pairwise distances across two such instances are ill-defined without specifying their relative transformation. In this work, we propose a general framework for optimal transport in the presence of latent global transformations. We cast the problem as a joint optimization over transport couplings and transformations chosen from a flexible class of invariances, propose algorithms to solve it, and show promising results in various tasks, including a popular unsupervised word translation benchmark.}

}

Towards Robust Interpretability with Self-Explaining Neural Networks

David Alvarez-Melis, Tommi S. Jaakkola

NeurIPS'18: Neural Information Processing Systems. 2018.

@incollection{NIPS2018_8003,

title = {Towards Robust Interpretability with Self-Explaining Neural Networks},

author = {Alvarez Melis, David and Jaakkola, Tommi},

booktitle = {Advances in Neural Information Processing Systems 31},

editor = {S. Bengio and H. Wallach and H. Larochelle and K. Grauman and N. Cesa-Bianchi and R. Garnett},

pages = {7786--7795},

year = {2018},

publisher = {Curran Associates, Inc.},

url = {http://papers.nips.cc/paper/8003-towards-robust-interpretability-with-self-explaining-neural-networks.pdf}

}

Gromov-Wasserstein Alignment of Word Embedding Spaces

David Alvarez-Melis, Tommi S. Jaakkola

EMNLP'18: Empirical Methods in Natural Language Processing. 2018. Oral Presentation.

@InProceedings{alvarezmelis2018gromov,

author = {Alvarez-Melis, David and Jaakkola, Tommi},

title = {Gromov-Wasserstein Alignment of Word Embedding Spaces},

booktitle = {Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing},

year = {2018},

publisher = {Association for Computational Linguistics},

pages = {1881--1890},

location = {Brussels, Belgium},

url = {http://aclweb.org/anthology/D18-1214}

}

Game-theoretic Interpretability for Temporal Modeling

Guang-He Lee, David Alvarez-Melis, Tommi S. Jaakkola

Fairness, Accountability, and Transparency in Machine Learning (@ICML 2018).

On the Robustness of Interpretability Methods

David Alvarez-Melis, Tommi S. Jaakkola

Workshop on Human Interpretability in Machine Learning (@ICML 2018).

Structured Optimal Transport

David Alvarez-Melis, Tommi S. Jaakkola, Stefanie Jegelka

AISTATS'18: Artificial Intelligence and Statistics. 2018. Oral Presentation.

Earlier version at NIPS Workshop on Optimal Transport for Machine Learning, 2017, as Extended Oral.

@InProceedings{pmlr-v84-alvarez-melis18a,

title = {Structured Optimal Transport},

author = {David Alvarez-Melis and Tommi Jaakkola and Stefanie Jegelka},

booktitle = {Proceedings of the Twenty-First International Conference on Artificial Intelligence and Statistics},

pages = {1771--1780},

year = {2018},

editor = {Amos Storkey and Fernando Perez-Cruz},

volume = {84},

series = {Proceedings of Machine Learning Research},

address = {Playa Blanca, Lanzarote, Canary Islands},

month = {09--11 Apr},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v84/alvarez-melis18a/alvarez-melis18a.pdf},

url = {http://proceedings.mlr.press/v84/alvarez-melis18a.html},

abstract = {Optimal Transport has recently gained interest in machine learning for applications ranging from domain adaptation to sentence similarities or deep learning. Yet, its ability to capture frequently occurring structure beyond the "ground metric" is limited. In this work, we develop a nonlinear generalization of (discrete) optimal transport that is able to reflect much additional structure. We demonstrate how to leverage the geometry of this new model for fast algorithms, and explore connections and properties. Illustrative experiments highlight the benefit of the induced structured couplings for tasks in domain adaptation and natural language processing.}

}

The Emotional GAN: Priming Adversarial Generation of Art with Emotion

David Alvarez-Melis, Judith Amores

NIPS Workshop on Machine Learning for Creativity and Design. 2017.

Distributional Adversarial Networks

Chengtao Li*, David Alvarez-Melis*, Keyulu Xu, Stefanie Jegelka, Suvrit Sra

ICLR'17: International Conference on Learning Representations (Workshop track). 2017.

A Causal Framework for Explaining the Predictions of Black-Box Sequence-to-Sequence Models

David Alvarez-Melis, Tommi S. Jaakkola

EMNLP'17: Empirical Methods in Natural Language Processing. 2017.

- MIT News: How Neural Networks think.

@InProceedings{alvarezmelis2017causal,

author = {Alvarez-Melis, David and Jaakkola, Tommi},

title = {A causal framework for explaining the predictions of black-box sequence-to-sequence models},

booktitle = {Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing},

month = {September},

year = {2017},

address = {Copenhagen, Denmark},

publisher = {Association for Computational Linguistics},

pages = {412--421},

url = {https://www.aclweb.org/anthology/D17-1042}

}

Tree-structured Decoding with Doubly-recurrent Neural Networks

David Alvarez-Melis, Tommi S. Jaakkola

ICLR'17: International Conference on Learning Representations. 2017.

@inproceedings{alvarezmelis2017tree,

title={Tree-structured decoding with doubly-recurrent neural networks},

author={Alvarez-Melis, David and Jaakkola, Tommi S},

booktitle = {Proceedings of the International Conference on Learning Representations (ICLR)},

year={2017}

}

Topic Modeling in Twitter: Aggregating Tweets by Conversations

David Alvarez-Melis*, Martin Saveski*

ICWSM'16: International AAAI Conference on Web and Social Media. 2016. (Short Paper)

@inproceedings{alvarezmelis2016toic,

author = {David Alvarez{-}Melis and Martin Saveski},

title = {Topic Modeling in Twitter: Aggregating Tweets by Conversations},

booktitle = {Proceedings of the Tenth International Conference on Web and Social

Media (ICWSM)},

pages = {519--522},

year = {2016},

url = {http://www.aaai.org/ocs/index.php/ICWSM/ICWSM16/paper/view/13162},

}

Word, graph and manifold embedding from Markov processes

Tatsunori B. Hashimoto, David Alvarez-Melis, Tommi S. Jaakkola

NIPS 2015 Workshop on Nonparametric Methods for Large Scale Representation Learning. Oral presentation.

A translation of 'The characteristic function of a random phenomenon' by Bruno de Finetti

David Alvarez-Melis, Tamara Broderick

Translation. 2015

The Matrix Multiplicative Weights Algorithm for Domain Adaptation

David Alvarez-Melis (advisor: Mehryar Mohri)

MS Thesis, Courant Institute. 2013.

Word Embeddings as Metric Recovery in Semantic Spaces

Tatsunori B. Hashimoto, David Alvarez-Melis, Tommi S. Jaakkola

TACL: Transactions of the Association for Computational Linguistics. 2016. (presented at ACL'16).

@article{Hashimoto2016Word,

author = {Hashimoto, Tatsunori and Alvarez-Melis, David and Jaakkola, Tommi },

title = {Word Embeddings as Metric Recovery in Semantic Spaces},

journal = {Transactions of the Association for Computational Linguistics},

volume = {4},

year = {2016},

issn = {2307-387X},

url = {https://transacl.org/ojs/index.php/tacl/article/view/809},

pages = {273--286}

}

To Backtrack or Not to Backtrack: When Sequential Search Limits Model Reasoning

Sunny Qin, David Alvarez-Melis*, Samy Jelassi*, Eran Malach*

COLM'25: Conference on Language Modeling. 2025.

What is the Right Notion of Distance between Predict-then-Optimize Tasks?

Paula Rodriguez-Diaz, Lingkai Kong, Kai Wang, David Alvarez-Melis, Milind Tambe

UAI'25: Uncertainty in Artificial Intelligence. 2025.

Neural Unbalanced Optimal Transport via Cycle-Consistent Semi-Couplings

Frederike Lübeck*, Charlotte Bunne*, Gabriele Gut, Jacobo Sarabia del Castillo, Lucas Pelkmans, David Alvarez-Melis

From Human Explanation to Model Interpretability: A Framework Based on Weight of Evidence

David Alvarez-Melis, Harmanpreet Kaur, Hal Daumé III, Hanna Wallach, Jennifer Wortman Vaughan

HCOMP '21: The 9th AAAI Conference on Human Computation and Crowdsourcing. 2021.

Weight of Evidence as a Basis for Human-Oriented Explanations

David Alvarez-Melis, Hal Daumé III, Jennifer Wortman Vaughan, Hanna Wallach

HCML @ NeurIPS 2019

Functional Transparency for Structured Data: a Game-Theoretic Approach

Guang-He Lee, Wengong Jin, David Alvarez-Melis, Tommi S. Jaakkola

ICML'19: International Conference on Machine Learning.

@InProceedings{pmlr-v97-lee19b,

title = {Functional Transparency for Structured Data: a Game-Theoretic Approach},

author = {Lee, Guang-He and Jin, Wengong and Alvarez-Melis, David and Jaakkola, Tommi},

booktitle = {Proceedings of the 36th International Conference on Machine Learning},

pages = {3723--3733},

year = {2019},

editor = {Chaudhuri, Kamalika and Salakhutdinov, Ruslan},

volume = {97},

series = {Proceedings of Machine Learning Research},

address = {Long Beach, California, USA},

month = {09--15 Jun},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v97/lee19b/lee19b.pdf},

url = {http://proceedings.mlr.press/v97/lee19b.html},

abstract = {We provide a new approach to training neural models to exhibit transparency in a well-defined, functional manner. Our approach naturally operates over structured data and tailors the predictor, functionally, towards a chosen family of (local) witnesses. The estimation problem is setup as a co-operative game between an unrestricted \emph{predictor} such as a neural network, and a set of \emph{witnesses} chosen from the desired transparent family. The goal of the witnesses is to highlight, locally, how well the predictor conforms to the chosen family of functions, while the predictor is trained to minimize the highlighted discrepancy. We emphasize that the predictor remains globally powerful as it is only encouraged to agree locally with locally adapted witnesses. We analyze the effect of the proposed approach, provide example formulations in the context of deep graph and sequence models, and empirically illustrate the idea in chemical property prediction, temporal modeling, and molecule representation learning.}

}

Towards Robust Interpretability with Self-Explaining Neural Networks

David Alvarez-Melis, Tommi S. Jaakkola

NeurIPS'18: Neural Information Processing Systems. 2018.

@incollection{NIPS2018_8003,

title = {Towards Robust Interpretability with Self-Explaining Neural Networks},

author = {Alvarez Melis, David and Jaakkola, Tommi},

booktitle = {Advances in Neural Information Processing Systems 31},

editor = {S. Bengio and H. Wallach and H. Larochelle and K. Grauman and N. Cesa-Bianchi and R. Garnett},

pages = {7786--7795},

year = {2018},

publisher = {Curran Associates, Inc.},

url = {http://papers.nips.cc/paper/8003-towards-robust-interpretability-with-self-explaining-neural-networks.pdf}

}

Game-theoretic Interpretability for Temporal Modeling

Guang-He Lee, David Alvarez-Melis, Tommi S. Jaakkola

Fairness, Accountability, and Transparency in Machine Learning (@ICML 2018).

On the Robustness of Interpretability Methods

David Alvarez-Melis, Tommi S. Jaakkola

Workshop on Human Interpretability in Machine Learning (@ICML 2018).

A Causal Framework for Explaining the Predictions of Black-Box Sequence-to-Sequence Models

David Alvarez-Melis, Tommi S. Jaakkola

EMNLP'17: Empirical Methods in Natural Language Processing. 2017.

- MIT News: How Neural Networks think.

@InProceedings{alvarezmelis2017causal,

author = {Alvarez-Melis, David and Jaakkola, Tommi},

title = {A causal framework for explaining the predictions of black-box sequence-to-sequence models},

booktitle = {Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing},

month = {September},

year = {2017},

address = {Copenhagen, Denmark},

publisher = {Association for Computational Linguistics},

pages = {412--421},

url = {https://www.aclweb.org/anthology/D17-1042}

}

Optimal Transport in Structured Domains: Algorithms and Applications

David Alvarez-Melis (advisor: Tommi S. Jaakkola)

PhD Thesis, MIT. 2019.

The Matrix Multiplicative Weights Algorithm for Domain Adaptation

David Alvarez-Melis (advisor: Mehryar Mohri)

MS Thesis, Courant Institute. 2013.

Lax-Milgram's Theorem: Generalizations and Applications

David Alvarez-Melis (advisor: Carlos Bosch Giral)

BSc Thesis, ITAM. 2011.

To Backtrack or Not to Backtrack: When Sequential Search Limits Model Reasoning

Sunny Qin, David Alvarez-Melis*, Samy Jelassi*, Eran Malach*

COLM'25: Conference on Language Modeling. 2025.

What is the Right Notion of Distance between Predict-then-Optimize Tasks?

Paula Rodriguez-Diaz, Lingkai Kong, Kai Wang, David Alvarez-Melis, Milind Tambe

UAI'25: Uncertainty in Artificial Intelligence. 2025.


DDEQs: Distributional Deep Equilibrium Models through Wasserstein Gradient Flows

Jonathan Geuter, Clément Bonet, Anna Korba, David Alvarez-Melis

AISTATS'25: International Conference on Artificial Intelligence and Statistics. 2025.

Mixture of Parrots: Experts improve memorization more than reasoning

Samy Jelassi, Clara Mohri, David Brandfonbrener, Alex Gu, Nikhil Vyas, Nikhil Anand, David Alvarez-Melis, Yuanzhi Li, Sham M. Kakade, Eran Malach

ICLR'25: International Conference on Learning Representations. 2025.

A Label is Worth A Thousand Images in Dataset Distillation

Tian Qin, Zhiwei Deng, David Alvarez-Melis

NeurIPS'24: Neural Information Processing Systems. 2024.

@inproceedings{NEURIPS2024_ee45939f,

author = {Qin, Tian and Deng, Zhiwei and Alvarez-Melis, David},

booktitle = {Advances in Neural Information Processing Systems},

editor = {A. Globerson and L. Mackey and D. Belgrave and A. Fan and U. Paquet and J. Tomczak and C. Zhang},

pages = {131946--131971},

publisher = {Curran Associates, Inc.},

title = {A Label is Worth A Thousand Images in Dataset Distillation},

url = {https://proceedings.neurips.cc/paper_files/paper/2024/file/ee45939f4403e8abd63a15a29a9c055b-Paper-Conference.pdf},

volume = {37},

year = {2024}

}

Tag-LLM: Repurposing General-Purpose LLMs for Specialized Domains

Junhong Shen, Neil Tenenholtz, James Brian Hall, David Alvarez-Melis, Nicolo Fusi

ICML'24: International Conference on Machine Learning. 2024.

@InProceedings{pmlr-v235-shen24f,

title = {Tag-{LLM}: Repurposing General-Purpose {LLM}s for Specialized Domains},

author = {Shen, Junhong and Tenenholtz, Neil and Hall, James Brian and Alvarez-Melis, David and Fusi, Nicolo},

booktitle = {Proceedings of the 41st International Conference on Machine Learning},

pages = {44759--44773},

year = {2024},

editor = {Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix},

volume = {235},

series = {Proceedings of Machine Learning Research},

month = {21--27 Jul},

publisher = {PMLR},

pdf = {https://raw.githubusercontent.com/mlresearch/v235/main/assets/shen24f/shen24f.pdf},

url = {https://proceedings.mlr.press/v235/shen24f.html},

abstract = {Large Language Models (LLMs) have demonstrated remarkable proficiency in understanding and generating natural language. However, their capabilities wane in highly specialized domains underrepresented in the pretraining corpus, such as physical and biomedical sciences. This work explores how to repurpose general LLMs into effective task solvers for specialized domains. We introduce a novel, model-agnostic framework for learning custom input tags, which are parameterized as continuous vectors appended to the LLM’s embedding layer, to condition the LLM. We design two types of input tags: domain tags are used to delimit specialized representations (e.g., chemical formulas) and provide domain-relevant context; function tags are used to represent specific functions (e.g., predicting molecular properties) and compress function-solving instructions. We develop a three-stage protocol to learn these tags using auxiliary data and domain knowledge. By explicitly disentangling task domains from task functions, our method enables zero-shot generalization to unseen problems through diverse combinations of the input tags. It also boosts LLM’s performance in various specialized domains, such as predicting protein or chemical properties and modeling drug-target interactions, outperforming expert models tailored to these tasks.}

}

Generating Synthetic Datasets by Interpolating along Generalized Geodesics

Jiaojiao Fan, David Alvarez-Melis

UAI'23: Uncertainty in Artificial Intelligence. 2023.

@INPROCEEDINGS{fan2023generating,

title = "Generating Synthetic Datasets by Interpolating along Generalized Geodesics",

booktitle = "Proceedings of the {Thirty-Ninth} Conference on Uncertainty in Artificial Intelligence",

author = "Fan, Jiaojiao and Alvarez-Melis, David",

publisher = "Proceedings of Machine Learning Research",

year = 2023,

conference = "Uncertainty in Artificial Intelligence"

}

InfoOT: Information Maximizing Optimal Transport

Ching-Yao Chuang, Stefanie Jegelka, David Alvarez-Melis

ICML'23: International Conference on Machine Learning. 2023.

@INPROCEEDINGS{chuang2023infoot,

title = "{InfoOT}: Information Maximizing Optimal Transport",

booktitle = "Proceedings of the 40th International Conference on Machine Learning",

author = "Chuang, Ching-Yao and Jegelka, Stefanie and Alvarez-Melis, David",

editor = "Krause, Andreas and Brunskill, Emma and Cho, Kyunghyun and Engelhardt, Barbara and Sabato, Sivan and Scarlett, Jonathan",

publisher = "PMLR",

volume = 202,

pages = "6228--6242",

series = "Proceedings of Machine Learning Research",

institution = "PMLR",

year = 2023

}

Domain Adaptation using Optimal Transport for Invariant Learning using Histopathology datasets

Kianoush Falahkheirkhah, Alex Lu, David Alvarez-Melis, and Grace Huynh

Medical Imaging with Deep Learning (MIDL). 2023.

Neural Unbalanced Optimal Transport via Cycle-Consistent Semi-Couplings

Frederike Lübeck*, Charlotte Bunne*, Gabriele Gut, Jacobo Sarabia del Castillo, Lucas Pelkmans, David Alvarez-Melis